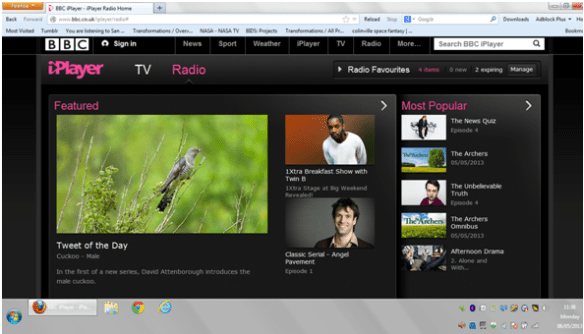

I’m at a meeting of the Virtual Worlds in Education Roundtable (twice – I mean I have two avatars there) and the subject is what’s new this academic year in virtual worlds. For the first time in a long time I’m not involved in any teaching inworld, which is a disappointment, and it’s quite worrying that this might imply a downward trend in their use in education. Some of the others around the table are doing stuff though, and this one looks very interesting. I’ll paste the entire course info here in case anyone is interested.

++++++++++++++++++++++++++++++++++++++++++++++++++++++

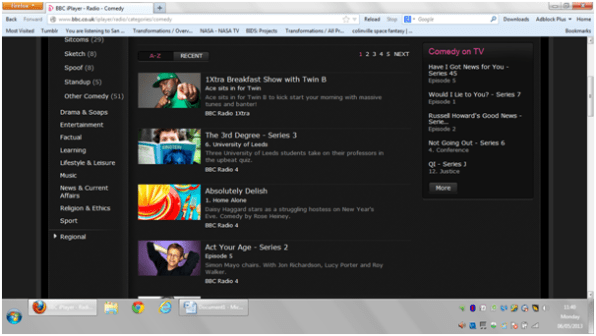

This fall a new kind of course will be taught by 15 institutions of higher learning. The courses are all connected on the theme of feminism and technology, and the general public is welcome to participate through independent collaborative groups. This is an invitation to join a discussion group in Second Life, which will meet on Sundays at 2pm to discuss the weekly themes of the course.

I hope you can join us, and if you know someone who might be interested, please forward this message. More information is below.

WHAT:

A discussion group revolving around the FemTechNet Distributed Open Collaborative Course on feminism and new technologies. Please see the press release for this collaborative course below. More information can be found on the website: http://femtechnet.newschool.edu/docc2013/

DATE: September 29 – December 8

WHEN:

Sundays at 2pm Eastern Daylight/Standard Time (11am Pacific, 7pm GMT). Check the Minerva OSU Calendar for cancellations or date changes: http://elliebrewster.com/minerva/minerva-calendar/

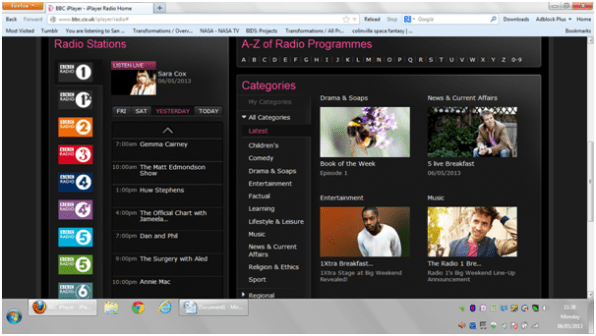

WHERE:

The discussions will be held in the virtual world Second Life, in the Ohio State virtual classroom, Minerva OSU. To find Minerva OSU: Simply type Minerva OSU into the Second Life address bar.

If you are new to virtual worlds and would like to join an orientation session in the week preceding the first meeting, please contact Ellie Brewster.

If you are exploring in Second Life and need help, please IM Ellie Brewster.

TOPICS:

With the approval of the group, we will follow the weekly video dialogues that accompany the course (schedule of videos is here: http://femtechnet.newschool.edu/video-dialogues-topics-schedule/ ). Other suggestions for topics are welcome.

WHO CAN JOIN:

Anyone can join the discussion; however, there will be a weekly limit of 35. If you cannot gain access to the classroom, it will be because the room is full. If you are interested in leading a second discussion group at a different time, please let me know.

HOW TO JOIN:

For inclusion in the e-mailing list (no more than one e-mail per week), and membership in the Second Life group (necessary for admission), please send a request to this address with DOCC Mailing List in the subject line. Please include your avatar name.

For Immediate Release

Feminist Digital Initiative Challenges Universities’ Race for MOOCs

Columbus, OH, August 21, 2013: FemTechNet, a network of feminist scholars and educators, is launching a new model for online learning at 15 higher education institutions this fall. The DOCC, or Distributed Open Collaborative Course, is a new approach to collaborative learning and an alternative to MOOCs, the massive open online course model that proponents claim will radicalize twenty-first century higher education.

The DOCC model is not based on centralized pedagogy by a single “expert” faculty, nor on the economic interests of a particular institution. Instead, the DOCC recognizes, and is built on, expertise distributed among participants in diverse institutional contexts. The organization of a DOCC emphasizes learning collaboratively in a digital age and avoids reproducing pedagogical techniques that conceive of the student as a passive listener. A DOCC allows for the active participation of all kinds of learners and for the extension of classroom experience beyond the walls, physical or virtual, of a single institution. FemTechNet’s first DOCC course, “Dialogues on Feminism and Technology,” will launch fall 2013.

The participating institutions range from small liberal arts colleges to major research institutions. They include: Bowling Green University, Brown University, California Polytechnic State University, Colby-Sawyer College, CUNY, Macaulay Honors College and Lehman College (CUNY), The New School, Ohio State University, Ontario College of Art and Design, Pennsylvania State University, Pitzer College, Rutgers University, University of California San Diego, University of Illinois Urbana-Champaign and Yale University.

DOCC participants, both online and in residence, are part of individualized “NODAL courses” within the network. Each institution’s faculty configures its own course within its specific educational setting. Both faculty and students will share ideas, resources, and assignments as a feminist network: the faculty as they develop curricula and deliver the course in real time; the students as they work collaboratively with faculty and each other.

At Ohio State, the course will be taught in Women’s, Gender, and Sexuality Studies by Dr. Christine (Cricket) Keating. The course, “Gender, Media, and New Technologies,” will be offered on the undergraduate level. Keating is a recipient of the 2011 Alumni Award for Distinguished Teaching. This course takes as its starting point the following questions: How are gender identities constituted in technologically mediated environments? How have cyberfeminists used technology to build coalitions and unite people across diverse contexts? How are the “do it yourself” and “do it with others” ethics in technology cultures central to feminist politics? Juxtaposing theoretical considerations and case studies, course topics include: identity and subjectivity; technological activism; gender, race and sexualities; place; labor; ethics; and the transformative potentials of new technologies. The course itself is a part of a cutting-edge experiment in education, culture, and technology. It is a “nodal” course within a Distributed Open Collaborative Course (DOCC). In this course, we will collaborate with students and professors across the U.S. and Canada to investigate issues of gender, race, and techno-culture.

These dialogues are also anchored by video curriculum produced by FemTechNet. “Dialogues on Feminism and Technology” are currently twelve recorded video dialogues featuring pairs of scholars and artists from around the world who think and reimagine technology through a feminist lens. Participants in the DOCC — indeed, anyone with a connection to the web — can access the video dialogues, and are invited to discuss them by means of blogs, voicethreads and other electronic media. Even as the course takes place, students and teachers can plug in and join the conversation. Through the exchanges and participants’ input, course content for the DOCC will continue to grow. From this process emerges a dynamic and self-reflective educational model.